- content/cloud/advanced-features/images/router3.png 0 additions, 0 deletions

- content/cloud/advanced-features/images/router4.png 0 additions, 0 deletions

- content/cloud/advanced-features/images/router5.png 0 additions, 0 deletions

- content/cloud/advanced-features/images/router6.png 0 additions, 0 deletions

- content/cloud/advanced-features/index.md 297 additions, 0 deletions

- content/cloud/best-practices/images/accessing-vms-through-jump-host-6-mod.png 0 additions, 0 deletions

- content/cloud/best-practices/index.md 226 additions, 0 deletions

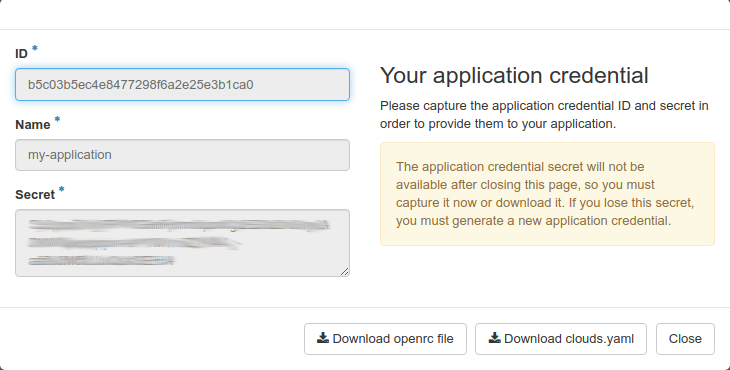

- content/cloud/cli/images/app_creds_1.png 0 additions, 0 deletions

- content/cloud/cli/images/app_creds_2.png 0 additions, 0 deletions

- content/cloud/cli/index.md 281 additions, 0 deletions

- content/cloud/contribute/index.md 75 additions, 0 deletions

- content/cloud/faq/index.md 183 additions, 0 deletions

- content/cloud/flavors/index.md 73 additions, 0 deletions

- content/cloud/gpus/index.md 25 additions, 0 deletions

- content/cloud/image-rotation/index.md 7 additions, 0 deletions

- content/cloud/network/images/1.png 0 additions, 0 deletions

- content/cloud/network/images/2.png 0 additions, 0 deletions

- content/cloud/network/images/3.png 0 additions, 0 deletions

- content/cloud/network/images/4.png 0 additions, 0 deletions

- content/cloud/network/images/5.png 0 additions, 0 deletions

- content/cloud/advanced-features/index.md: mode 0 → 100644

- content/cloud/best-practices/index.md: mode 0 → 100644

- content/cloud/cli/images/app_creds_1.png: mode 0 → 100644, 109 KiB

- content/cloud/cli/images/app_creds_2.png: mode 0 → 100644, 60.1 KiB

- content/cloud/cli/index.md: mode 0 → 100644

- content/cloud/contribute/index.md: mode 0 → 100644

- content/cloud/faq/index.md: mode 0 → 100644

- content/cloud/flavors/index.md: mode 0 → 100644

- content/cloud/gpus/index.md: mode 0 → 100644

- content/cloud/image-rotation/index.md: mode 0 → 100644

- content/cloud/network/images/1.png: mode 0 → 100644, 10 KiB

- content/cloud/network/images/2.png: mode 0 → 100644, 5.35 KiB

- content/cloud/network/images/3.png: mode 0 → 100644, 25.9 KiB

- content/cloud/network/images/4.png: mode 0 → 100644, 46.7 KiB

- content/cloud/network/images/5.png: mode 0 → 100644, 24.7 KiB