Showing changed files

- content/cloud/network/images/allocate-fip.png (0 additions, 0 deletions)

- content/cloud/network/images/associate-fip.png (0 additions, 0 deletions)

- content/cloud/network/images/attach_interface.png (0 additions, 0 deletions)

- content/cloud/network/images/clear-router1.png (0 additions, 0 deletions)

- content/cloud/network/images/f1.png (0 additions, 0 deletions)

- content/cloud/network/images/instance1.png (0 additions, 0 deletions)

- content/cloud/network/images/ipv6_attach.png (0 additions, 0 deletions)

- content/cloud/network/images/network_floating_ip.png (0 additions, 0 deletions)

- content/cloud/network/images/network_routers-group.png (0 additions, 0 deletions)

- content/cloud/network/images/network_secutity_groups_egress.png (0 additions, 0 deletions)

- content/cloud/network/images/r1.png (0 additions, 0 deletions)

- content/cloud/network/images/r2.png (0 additions, 0 deletions)

- content/cloud/network/images/r3.png (0 additions, 0 deletions)

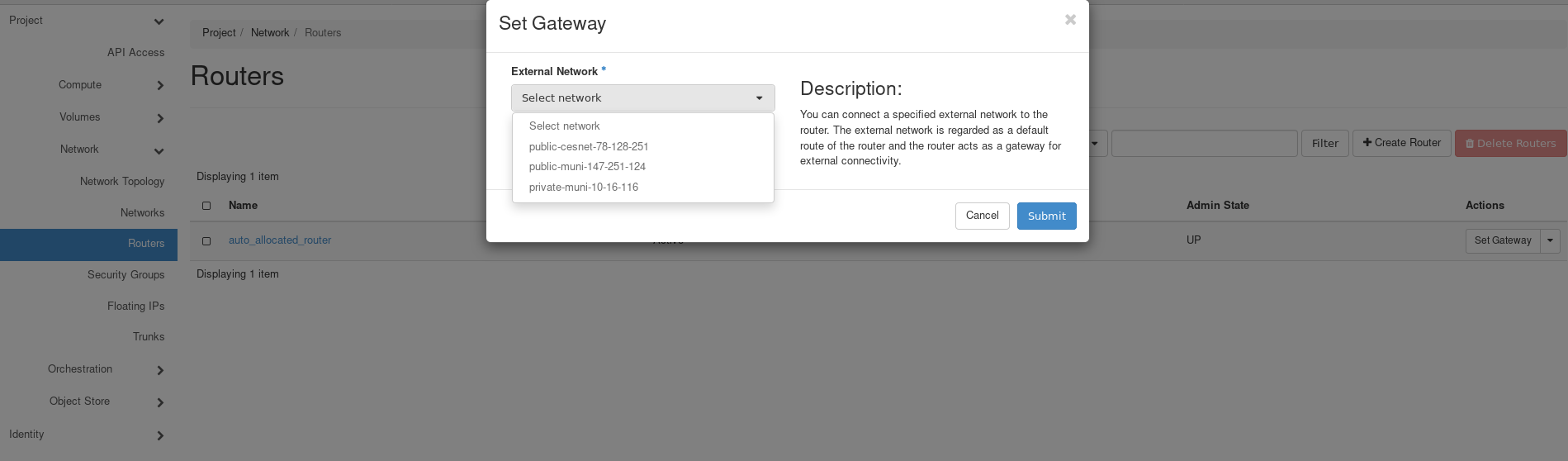

- content/cloud/network/images/set-router1.png (0 additions, 0 deletions)

- content/cloud/network/images/set-router2.png (0 additions, 0 deletions)

- content/cloud/network/index.md (407 additions, 0 deletions)

- content/cloud/news/index.md (70 additions, 0 deletions)

- content/cloud/project-expiration/index.md (11 additions, 0 deletions)

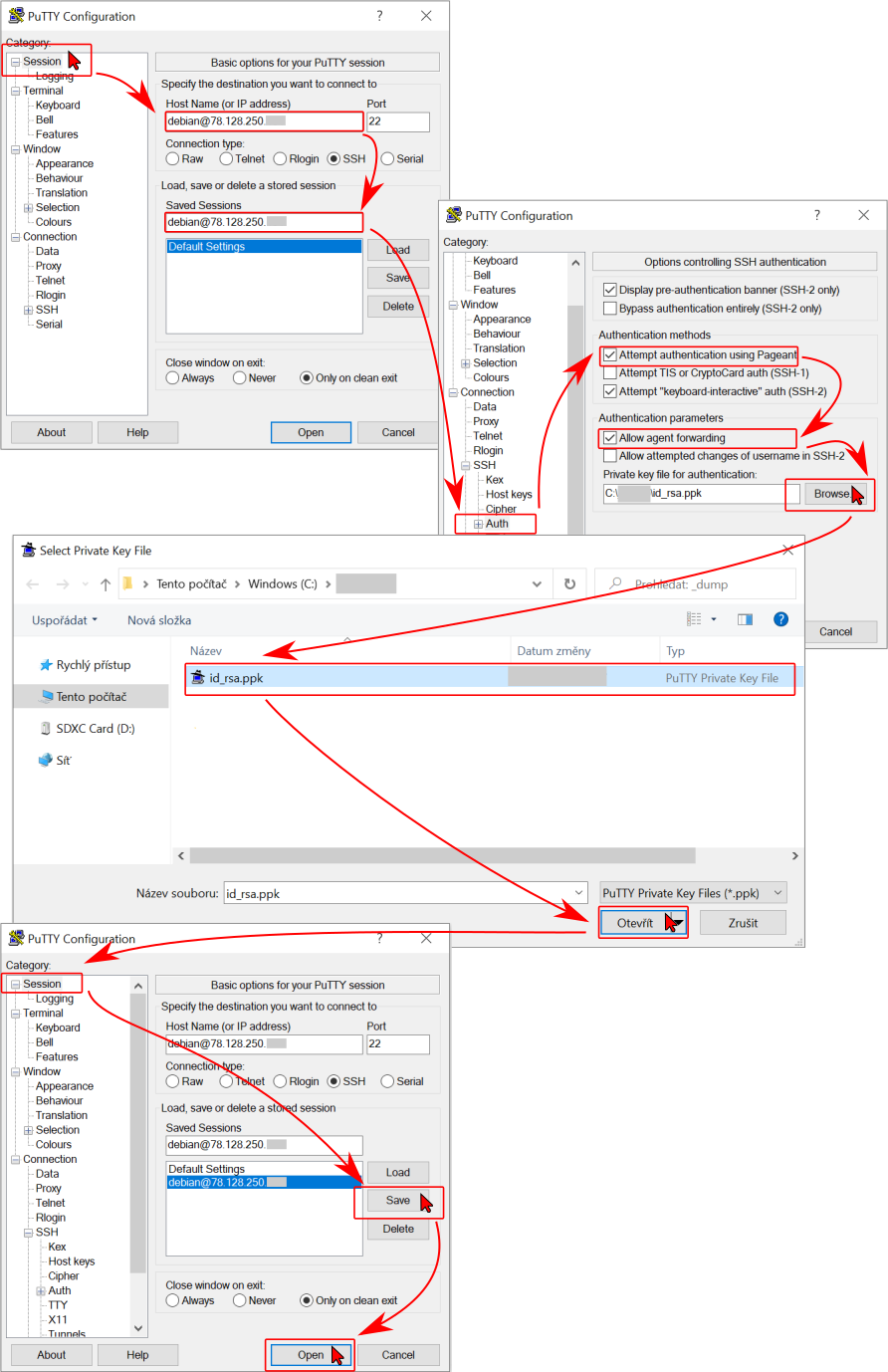

- content/cloud/putty/images/pageant-add-key.png (0 additions, 0 deletions)

- content/cloud/putty/images/putty-connect2instance.png (0 additions, 0 deletions)

60.1 KiB

61.4 KiB

44.5 KiB

42.1 KiB

content/cloud/network/images/f1.png

0 → 100644

21.4 KiB

content/cloud/network/images/instance1.png

0 → 100644

78 KiB

content/cloud/network/images/ipv6_attach.png

0 → 100644

58 KiB

203 KiB

250 KiB

125 KiB

content/cloud/network/images/r1.png

0 → 100644

20.2 KiB

content/cloud/network/images/r2.png

0 → 100644

42.1 KiB

content/cloud/network/images/r3.png

0 → 100644

29.2 KiB

content/cloud/network/images/set-router1.png

0 → 100644

40.7 KiB

content/cloud/network/images/set-router2.png

0 → 100644

66.4 KiB

content/cloud/network/index.md

0 → 100644

content/cloud/news/index.md

0 → 100644

content/cloud/project-expiration/index.md

0 → 100644

69.3 KiB

302 KiB